Open-source game engine Godot is facing mounting strain as a surge of AI-generated code submissions overwhelms volunteer maintainers, raising concerns about quality control, contributor accountability and the long-term sustainability of community-driven software projects.

Core developers behind the free and open-source engine say they are grappling with a wave of pull requests that appear to have been produced with the help of generative artificial intelligence tools. While such tools can accelerate coding, maintainers argue that many submissions show limited understanding of the engine’s architecture, introducing errors, redundancies and security risks that require extensive review and correction.

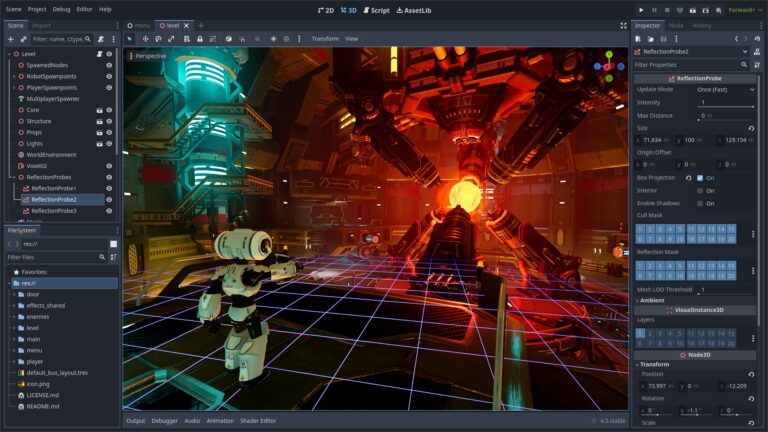

Godot, widely used by independent developers and small studios as an alternative to proprietary engines such as Unity and Unreal Engine, operates under an open governance model. Its source code is publicly available, and contributions from volunteers form a central part of its development process. That openness, long regarded as a strength, is now creating new pressures.

Developers involved in reviewing contributions have described a noticeable shift in the pattern of submissions over the past year. Instead of carefully scoped improvements or bug fixes from contributors familiar with the codebase, maintainers report receiving large patches filled with boilerplate-style changes, inconsistent naming conventions and logic that fails to integrate cleanly with existing systems. Some submissions replicate functionality that already exists or propose sweeping refactors without a clear rationale.

Senior contributors have said publicly that the review burden has grown sharply. They argue that reviewing AI-assisted code that its submitter does not fully understand can take longer than writing the fix from scratch. Volunteer time, already limited, is being diverted to filtering out low-quality contributions rather than advancing planned features and stability improvements.

Godot’s popularity has risen following turbulence in the game development ecosystem. In 2023, when Unity announced controversial pricing changes tied to game installs, many developers began exploring alternatives. Godot saw a surge in downloads, community engagement and financial backing through donations and sponsorships. That influx of interest also expanded its contributor base, bringing both experienced programmers and newcomers eager to participate.

Generative AI coding assistants, powered by large language models, have become commonplace during the same period. Tools integrated into code editors can produce entire functions or suggest architectural changes based on natural language prompts. Technology companies promoting these tools argue they enhance productivity and lower barriers to entry for aspiring developers. Yet open-source communities are discovering unintended consequences.

Experts in software engineering note that AI-generated code often reflects patterns present in training data without full contextual awareness. While such output may appear syntactically correct, it can misalign with a project’s specific style guidelines, performance constraints or security standards. For complex systems like a game engine, subtle inconsistencies can cascade into significant bugs.

Godot’s maintainers have not banned AI-assisted contributions outright. Instead, discussions within the community have centred on reinforcing contribution guidelines and encouraging submitters to demonstrate understanding of their changes. Some contributors have suggested requiring more detailed explanations in pull requests, comprehensive testing and stricter adherence to coding standards before code is considered for merging.

The debate also touches on broader philosophical questions about open-source collaboration. Projects like Godot depend on goodwill, transparency and distributed responsibility. If contributors rely heavily on automated tools without engaging deeply with the codebase, maintainers worry that the culture of shared learning and mentorship could erode. At the same time, excluding newcomers or dismissing AI tools entirely risks alienating developers who see such technologies as integral to modern workflows.

Industry observers point out that the challenge is not unique to Godot. Maintainers across popular open-source repositories on platforms such as GitHub have reported spikes in AI-influenced submissions. Some projects have introduced labelling systems to flag AI-generated patches, while others have tightened review processes or implemented contribution quotas to manage workload.

Financial support, though improved for Godot compared with earlier years, does not eliminate the bottleneck. The engine is backed by the Godot Foundation, which coordinates fundraising and strategic direction, but much of the day-to-day code review remains volunteer-driven. Expanding the pool of trusted maintainers is one option under discussion, though onboarding new reviewers requires time and careful vetting.

For developers who rely on Godot to build commercial titles, stability and reliability are paramount. Studios evaluating engines weigh not only features but also governance and long-term viability. Persistent friction in the contribution pipeline could slow feature rollouts or delay critical fixes, affecting user confidence.

Some community members argue that the current turbulence reflects growing pains rather than systemic decline. They note that every widely adopted open-source project must evolve its processes as scale increases. Clearer documentation, automated testing pipelines and stronger mentorship for first-time contributors could help filter out superficial submissions while preserving openness.
